My qualitative analysis of a small sample of the prediction results shows that only a few of the tweets labeled as hate are actually "hate speech." Most of them instead express vicious prejudices and stereotypes about the behavior of Chinese people.

The algorithm labeled many tweets containing political terms as hate speech. Most of those tweets aggressively condemn the Chinese Communist Party or the Chinese government, not Chinese people.

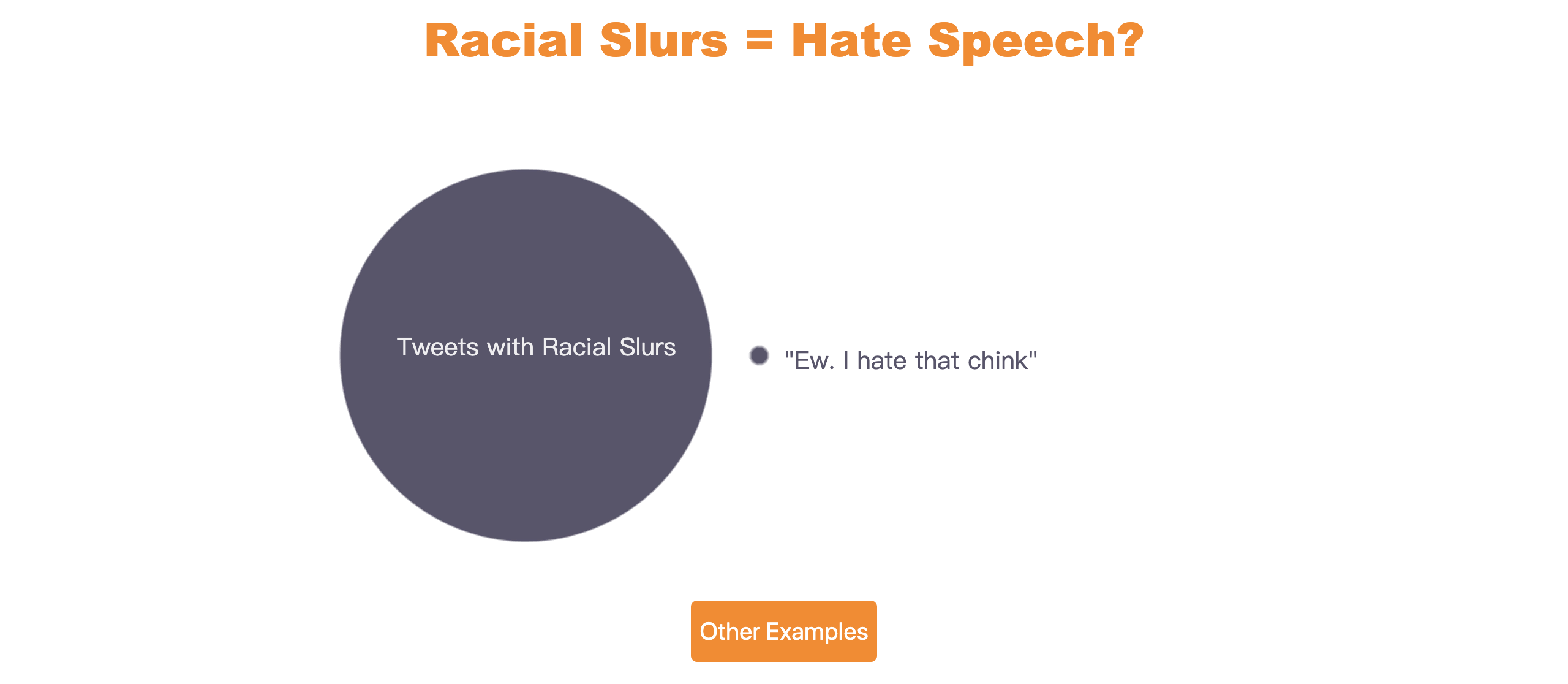

Tweets containing racial slurs like “Ching Chong” or “chink” are more likely to be labeled as “hate speech.” However, a tweet that contains a racial slur is not necessarily derogatory: many Twitter users use these slurs as in-group slang when addressing one another.

Tweets that mention both Chinese food and aggressive words, which happens often when people are hungry, are more likely to be labeled as hate speech or offensive.

My model is based on a supervised machine learning method, which is fast and simple but not complex enough to capture the subtle meanings that language takes on in different contexts. Many researchers and experts train their algorithms with more carefully designed methods, which might produce better results in detecting hate speech.
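To illustrate why a simple word-based classifier misses context, here is a minimal sketch. It is not the model used in this project, whose exact method is not specified beyond "supervised." It assumes a multinomial Naive Bayes classifier over bag-of-words counts as a stand-in, and the class name `BagOfWordsNB`, the labels `hate` / `not_hate`, and the training sentences are all hypothetical toy data. Because the model only sees word counts, two sentences with the same words in a different order get identical scores, even when one condemns the government and the other condemns Chinese people.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase, replace non-letters with spaces, and split on whitespace."""
    cleaned = "".join(ch if ch.isalpha() or ch.isspace() else " " for ch in text.lower())
    return cleaned.split()


class BagOfWordsNB:
    """Hypothetical multinomial Naive Bayes over word counts (word order is discarded)."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha  # Laplace smoothing constant

    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        return self

    def log_scores(self, text):
        """Unnormalized log P(class) + sum of log P(word | class) per class."""
        n_docs = sum(self.doc_counts.values())
        tokens = sorted(tokenize(text))  # sorting makes the sum independent of word order
        scores = {}
        for c in self.classes:
            denom = sum(self.word_counts[c].values()) + self.alpha * len(self.vocab)
            score = math.log(self.doc_counts[c] / n_docs)
            for tok in tokens:
                if tok in self.vocab:  # words never seen in training are ignored
                    score += math.log((self.word_counts[c][tok] + self.alpha) / denom)
            scores[c] = score
        return scores

    def predict(self, text):
        scores = self.log_scores(text)
        return max(scores, key=scores.get)


# Hypothetical toy training data, not the project's dataset.
train_texts = [
    "the government is the problem",
    "the party lies to everyone",
    "chinese people are the problem",
    "those people are dirty",
    "great dumplings for lunch today",
    "visiting family in shanghai",
]
train_labels = ["not_hate", "not_hate", "hate", "hate", "not_hate", "not_hate"]

model = BagOfWordsNB().fit(train_texts, train_labels)

# Same multiset of words, opposite targets of criticism.
a = "chinese people are not the problem the government is"
b = "the government is not the problem chinese people are"
print(model.log_scores(a) == model.log_scores(b))  # True: same words, same scores
print(model.predict(a), model.predict(b))  # both "hate" on this toy data
```

Since both sentences reduce to the same word counts, no amount of extra training data lets a bag-of-words model tell them apart; separating them requires a model that takes word order or wider context into account.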

Personal Project

Data Analysis, Design and Development

Sep 2019 — Nov 2019